Abstract

The development of neural networks has been inspired by the network of biological neurons in the brain. Neural networks have been applied to a wide variety of applications. These algorithms learn from experience to generate the required output, a process referred to as training the algorithm. Various machine-based algorithms satisfy this criterion. Neural networks also have drawbacks: large networks have high memory requirements, which can prohibit their use, and they require long processing times.

Introduction

An Artificial Neural Network (ANN) is modeled on the operation of biological neural networks. It offers an efficient way to design and analyze adaptive intelligent systems for a broad range of applications in the field of Artificial Intelligence (AI).

The above diagram displays the constituents of a biological neuron. The ‘Cell Body’ processes the input supplied by the ‘Dendrites’ from various neurons, and the output it produces is passed on as input to other neurons by the ‘Axon’.

Artificial Neural Networks are especially useful for applications that involve large sets of data. They are computational models with the ability to

· learn from a given set of data

· generalize from the training data

· organize data

Terms such as AI, knowledge-based systems, and expert systems are used to convey that it is possible to build machines that demonstrate intelligence similar to that of human beings. In engineering, neural networks serve two important functions: as pattern classifiers and as nonlinear adaptive filters.

An Artificial Neural Network follows an adaptive, nonlinear approach, mapping input values to the corresponding outcomes. Once the network has been trained with a sufficient amount of data, it is said to have completed its learning phase and can be applied in particular applications.

The ANN is built using a step-by-step approach that follows an implicit constraint; this process is termed the learning rule. The learning phase can be described as follows:

Initially, a network topology is selected for the given problem. A large amount of data is then fed into the network as input. The inputs are acted upon by an activation function to deliver a corresponding output. This output is compared with the desired output, and an error is generated if the two differ. The error is then fed back into the network to signal that it has made a mistake and should learn what the output ought to be when such an input is presented.
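The learning phase described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the topology is a single neuron, the activation is a threshold function, the weights are corrected with the perceptron rule, and the training data is the logical AND function. None of these choices comes from the text above; they are picked to keep the example small.

```python
def step(v):
    """Threshold activation (assumed): 1 if the weighted sum is non-negative, else 0."""
    return 1 if v >= 0 else 0


def train(samples, lr=1.0, epochs=20):
    """Train one neuron with the perceptron rule (an assumed learning rule)."""
    n_inputs = len(samples[0][0])
    weights = [0.0] * n_inputs
    bias = 0.0
    for _ in range(epochs):
        mistakes = 0
        for inputs, desired in samples:
            # Forward pass: weighted sum followed by the activation function.
            v = sum(w * x for w, x in zip(weights, inputs)) + bias
            output = step(v)
            # Compare with the desired output to obtain the error.
            error = desired - output
            if error != 0:
                mistakes += 1
                # Feed the error back: nudge each weight toward the desired output.
                weights = [w + lr * error * x for w, x in zip(weights, inputs)]
                bias += lr * error
        if mistakes == 0:
            break  # every sample is reproduced correctly, learning is complete
    return weights, bias


# Hypothetical training set: the logical AND of two binary inputs.
AND_SAMPLES = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]

weights, bias = train(AND_SAMPLES)
predictions = [
    step(sum(w * x for w, x in zip(weights, inputs)) + bias)
    for inputs, _ in AND_SAMPLES
]
```

After training, `predictions` matches the desired outputs of the four samples, which is the point at which the network would be considered ready for use.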

The design of a neural network architecture is mainly a trial-and-error process based on past experience. It also depends heavily on the selection process, the choice of neurons, and the learning algorithm. Selecting the appropriate network topology and algorithm for a given application is a challenging task.

In a nutshell, an ANN is a network of neurons, each of which

· takes input from the environment (or from other neurons)

· computes the weighted sum of all inputs

· applies the activation function to the weighted sum

· gives the output to other neurons
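The four steps above map directly onto a small function. The sketch below assumes a sigmoid activation and made-up input and weight values; the text does not prescribe either.

```python
import math


def sigmoid(v):
    """Sigmoid activation (an assumed choice): squashes any real value into (0, 1)."""
    return 1.0 / (1.0 + math.exp(-v))


def neuron_output(inputs, weights, activation=sigmoid):
    """Compute the weighted sum of the inputs, then apply the activation function."""
    if len(inputs) != len(weights):
        raise ValueError("each input needs exactly one weight")
    weighted_sum = sum(x * w for x, w in zip(inputs, weights))
    return activation(weighted_sum)


# Hypothetical values: three inputs with their synaptic weights.
y = neuron_output([1.0, 0.5, -1.0], [0.4, 0.2, 0.5])
```

The returned value `y` is what the neuron would pass on to the neurons connected to its output.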

With this overview of the Artificial Neural Network in place, the rest of this work explains the functioning of different neural network topologies, along with the training methods used to build a machine-based algorithm.

Artificial Neural Network Architecture

· The above diagram depicts an Artificial Neural Network with a set of n input units x1, x2, …, xn.

· Each such input has a synaptic weight associated with it. The weight of each input determines the effect it will have on the output.

· Inputs with higher weights are given more priority, since their impact on the output is greater than that of the other inputs.

· The corresponding outputs y1, y2, …, yp represent the state of activation for the given input.

· The summing function is a linear combination of the inputs and the synaptic weights.

· The linear combination is expressed as: x1w1 + x2w2 + … + xnwn.

· The term wij indicates the weight associated with the ith node serving as an input to the jth node.

· The output of the summing function determines the output for a given set of input values. During the learning process, the neural network recalls past values using the activation dynamics, which further adds to the network’s experience.

Mathematical Model

From the above diagram it can be inferred that there are three components of importance:

1. The synapses of the neurons, which are modeled as weights.

2. An adder, which sums the inputs after each has been scaled by its respective weight.

3. An activation function, which controls the amplitude of the output of the neuron.

From the diagram, the internal activity of neuron k is found to be:

$$v_k = \sum_{j=1}^{n} w_{kj}\, x_j$$

· The output yk is obtained by applying the activation function to vk.
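As a worked instance of the internal activity and the output, the sketch below stores the weights so that `weights[k][j]` is the weight from input j to neuron k. The threshold activation and the numbers are assumptions chosen so the arithmetic is easy to check by hand.

```python
def internal_activity(weights, inputs):
    """Return the weighted sum of the inputs for every neuron k.

    weights[k][j] is the weight from input j to neuron k.
    """
    return [sum(w_kj * x_j for w_kj, x_j in zip(row, inputs)) for row in weights]


def threshold(v):
    """Assumed activation: output 1 when the internal activity is non-negative."""
    return 1 if v >= 0 else 0


# Hypothetical example: two neurons, three inputs.
W = [
    [1, -1, 0],  # weights into neuron 0
    [0, 2, -1],  # weights into neuron 1
]
x = [3, 1, 4]

v = internal_activity(W, x)          # [3 - 1 + 0, 0 + 2 - 4] = [2, -2]
y = [threshold(v_k) for v_k in v]    # [1, 0]
```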

Processing units of the ANN:

· a set of processing units (neurons)

· a state of activation yk for every unit, which is equivalent to the output of the unit

· connections between the units, each defined by a weight wjk that determines the effect the signal of unit j has on unit k

· a propagation rule, which determines the effective input of a unit from its external inputs

· an activation function zk, which determines the new level of activation

· an external input for each unit

· a method for information gathering

· an environment within which the system must operate, providing input signals and, where required, error signals

The fitness of a set of weights is inversely proportional to the summed error over the training exemplars.
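One way to read this statement is sketched below. Several details are assumptions, since the text does not give them: the unit is linear, the per-exemplar error is squared before summing, and a small constant `eps` guards against division by zero when the error vanishes.

```python
def summed_error(weights, exemplars):
    """Sum of squared errors of a linear unit over all training exemplars."""
    total = 0.0
    for inputs, desired in exemplars:
        output = sum(w * x for w, x in zip(weights, inputs))
        total += (desired - output) ** 2
    return total


def fitness(weights, exemplars, eps=1e-9):
    """Fitness as the reciprocal of the summed error (eps avoids dividing by zero)."""
    return 1.0 / (summed_error(weights, exemplars) + eps)


# Hypothetical exemplars generated by the rule: desired = 1*x1 + 2*x2.
EXEMPLARS = [((1, 0), 1), ((0, 1), 2), ((1, 1), 3)]

good_weights = [1.0, 2.0]   # reproduces every exemplar, summed error 0
poor_weights = [0.0, 0.0]   # always outputs 0, summed error 1 + 4 + 9 = 14
```

Under this reading, `fitness(good_weights, EXEMPLARS)` is far larger than `fitness(poor_weights, EXEMPLARS)`, so a search over weights would prefer the first set.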

Artificial Neural Network: Topologies and Learning Methods

Processing unit:

- An ANN consists of interconnected processing units. The general model of a processing unit consists of a summing part followed by an output part.

- The summing part receives N input values, weights each value, and computes a weighted sum. The weighted sum is known as the activation value.

- The output part produces a signal from the activation value.

Interconnections:

· In an ANN several processing units are interconnected according to some topology to accomplish a pattern recognition task.

· The input may come from several neurons or other external sources.

· The amount of one unit's output that is received by another unit depends on the weight value associated with the connection between them.

· The set of N activation values of the network defines the activation state of the network at that instant. Similarly, the set of N output values defines the output state of the network at that instant.

Operations:

· In operation, each unit receives input from other connected units and/or from an external source.

· A weighted sum of the inputs is computed at a given instant of time. The resulting activation value determines the actual output of the unit through its output function.

· The output values and other external inputs in turn determine the activation and output states of the other units.

· Activation dynamics describes the activation state of the network as a function of time. It determines the trajectory of the states in the state space of the network.

Update:

· The updating of the output states of all the units can be performed synchronously.

· In this case the activation values of all the units are computed at the same time, assuming a given output state throughout.

· In asynchronous update, each unit is updated sequentially, taking the current output state of the network into account each time.
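The difference between the two update schemes is easiest to see on a tiny network. The sketch below assumes bipolar threshold units (outputs of +1 or -1) and a hypothetical two-unit network in which each unit inhibits the other; neither assumption comes from the text.

```python
def unit_output(weights_row, state):
    """Bipolar threshold unit (assumed): +1 if the weighted sum is non-negative, else -1."""
    v = sum(w * s for w, s in zip(weights_row, state))
    return 1 if v >= 0 else -1


def synchronous_update(weights, state):
    """Compute every unit from the same, unchanged output state."""
    return [unit_output(row, state) for row in weights]


def asynchronous_update(weights, state):
    """Update units one after another, each seeing the latest output state."""
    new_state = list(state)
    for k, row in enumerate(weights):
        new_state[k] = unit_output(row, new_state)
    return new_state


# Hypothetical network: two units that inhibit each other.
W = [
    [0, -1],
    [-1, 0],
]
start = [1, 1]

sync_result = synchronous_update(W, start)     # both units flip together
async_result = asynchronous_update(W, start)   # unit 1 already sees unit 0's new value
```

With synchronous update this network flips between `[1, 1]` and `[-1, -1]` on every step, whereas a single asynchronous sweep settles into `[-1, 1]`, which further sweeps leave unchanged.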

Training methods: An ANN can be trained using one of the three types of learning methods described below.

1. Supervised learning:

· The ANN is given a set of example pairs, and the aim is to find the function f: X -> Y in the allowed class of functions that matches the examples.

· What the network should learn about a given input is inferred from the desired output supplied with it.

· The weight adjustment is determined based on the deviation of the desired output from the actual output.

· Supervised learning may be used for structural learning or for temporal learning.

· Structural learning is concerned with capturing in the weights the relationship between the given input-output patterns.

· Temporal learning is concerned with capturing in the weights the relationship between the neighboring patterns in a sequence of patterns.

2. Unsupervised learning:

· It discovers the features in a given set of patterns and organizes the patterns accordingly.

· There is no externally specified output in this case.

· Unsupervised learning uses mostly local information to update the weights. The local information consists of the signal or activation values of the units at either end of the connection whose weight is being updated.

3. Reinforcement learning:

· There are times when the desired output for a given input is not known. Only the binary result that the output is right or wrong may be available.

· This binary result is known as the reinforcement signal.

· As mentioned above, this signal only evaluates the output, and learning based on it is known as reinforcement learning.

· This is in contrast with supervised learning, which is described as learning with a teacher, whereas reinforcement learning is known as learning with a critic.
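The three methods differ mainly in the signal that drives the weight change. The sketch below illustrates this for a single linear unit. The concrete rules are assumptions, not taken from the text: the delta rule stands in for supervised learning, a plain Hebbian rule stands in for unsupervised learning, and the critic is reduced to a function that reports only right or wrong.

```python
def linear_output(weights, inputs):
    """Weighted sum of the inputs (a linear unit, assumed for simplicity)."""
    return sum(w * x for w, x in zip(weights, inputs))


def supervised_update(weights, inputs, desired, lr=0.5):
    """Delta rule (assumed): the teacher supplies the desired output,
    and the weights move in proportion to the deviation from it."""
    error = desired - linear_output(weights, inputs)
    return [w + lr * error * x for w, x in zip(weights, inputs)]


def hebbian_update(weights, inputs, lr=0.5):
    """Hebbian rule (assumed): no desired output, only the local activity
    at both ends of each connection (input x and unit output)."""
    output = linear_output(weights, inputs)
    return [w + lr * output * x for w, x in zip(weights, inputs)]


def reinforcement_signal(output, desired):
    """Critic: reveals only whether the output was right, not what it should be."""
    return output == desired


# Hypothetical values.
w0 = [1.0, 0.0]
x = [1.0, 2.0]

w_supervised = supervised_update(w0, x, desired=3.0)   # error = 3 - 1 = 2
w_hebbian = hebbian_update(w0, x)                      # output = 1
critic_says = reinforcement_signal(linear_output(w0, x), 3.0)
```

Here the teacher's error of 2 moves the weights to `[2.0, 2.0]`, the Hebbian rule moves them to `[1.5, 1.0]` using only local activity, and the critic simply answers `False`.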

Neural Network Topologies

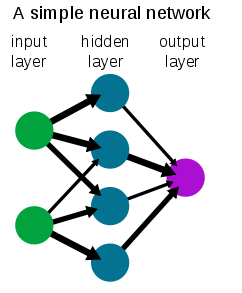

Two network topologies are explored here:

1. Feedforward topology

· The data flow from input to output is strictly feedforward.

· No feedback is available.

· Data processing extends to multiple layers.

Feedforward based topology

2. Recurrent topology

· These networks do contain feedback connections, since at times it becomes important to store the dynamic properties of the network.

· In some cases, the activation values of the units undergo a relaxation process such that the neural network evolves to a stable state in which these activations no longer change.

· In other applications, the changes in the activation values of the output neurons are significant, such that the dynamical behaviour constitutes the output of the neural network.

Recurrent based topology
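Both topologies can be illustrated with a few lines of Python. The sketch is hedged throughout: the tanh activation, the bipolar threshold units, the layer sizes, and all weight values are assumptions made for the example. The recurrent part shows the relaxation case, in which the activations are updated until they stop changing.

```python
import math


def layer_forward(weights, inputs):
    """One feedforward layer: weighted sum per unit, then tanh (assumed activation)."""
    return [math.tanh(sum(w * x for w, x in zip(row, inputs))) for row in weights]


def feedforward(layers, inputs):
    """Pass the signal strictly from input to output, layer by layer, with no feedback."""
    signal = inputs
    for weights in layers:
        signal = layer_forward(weights, signal)
    return signal


def relax(weights, state, max_steps=100):
    """Recurrent network: update units in turn until no activation changes."""
    state = list(state)
    for _ in range(max_steps):
        changed = False
        for k, row in enumerate(weights):
            v = sum(w * s for w, s in zip(row, state))
            new_value = 1 if v >= 0 else -1
            if new_value != state[k]:
                state[k] = new_value
                changed = True
        if not changed:
            break  # stable state reached
    return state


# Hypothetical feedforward network: 2 inputs -> 2 hidden units -> 1 output.
LAYERS = [
    [[1.0, -1.0], [0.5, 0.5]],
    [[1.0, 1.0]],
]
ff_output = feedforward(LAYERS, [0.3, 0.7])

# Hypothetical recurrent network with symmetric weights and no self-connections.
W_REC = [
    [0, -1, 1],
    [-1, 0, -1],
    [1, -1, 0],
]
stable_state = relax(W_REC, [1, 1, 1])
```

Starting from `[1, 1, 1]`, the recurrent network settles in `[1, -1, 1]` and stays there, while the feedforward network produces its output in a single pass.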

Artificial Intelligence

The phrase "artificial intelligence" can be defined as the simulation of human intelligence on a machine, so that the machine can efficiently identify and use the right piece of "Knowledge" at a given step of solving a problem.

The application areas of AI include expert systems, game playing, theorem proving, natural language processing, image recognition, robotics, and many others.

Fig A

Learning Systems:

· The concept of learning is illustrated here with reference to a natural problem: a child learning pronunciation from his mother (Fig A). The hearing system of the child receives the pronunciation of the character "A" and the voice system attempts to imitate it.

· The difference between the mother's and the child's pronunciation, hereafter called the error signal, is received by the child's learning system through the auditory nerve, and an actuation signal is generated by the learning system through a motor nerve to adjust the child's pronunciation.

· The adaptation of the child's voice system is continued until the amplitude of the error signal is insignificantly low. Each time the voice system passes through an adaptation cycle, the resulting tongue position of the child for speaking "A" is saved by the learning process.

· The learning problem discussed above is an example of the well-known parametric learning, where the adaptive learning process adjusts the parameters of the child's voice system autonomously to keep its response close enough to the "sample training pattern".
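The adaptation cycle in this example can be reduced to a toy loop in which a single parameter plays the role of the child's voice system. Everything concrete here is an assumption: the response is one number, the correction is a fixed fraction of the error, and the tolerance defines when the error counts as insignificantly low.

```python
def adapt(target, response=0.0, rate=0.5, tolerance=1e-3, max_cycles=1000):
    """Adjust one parameter until its response is close enough to the target pattern."""
    history = [response]  # the response saved after each adaptation cycle
    for _ in range(max_cycles):
        error = target - response      # error signal: teacher minus learner
        if abs(error) < tolerance:
            break                      # error amplitude is insignificantly low
        response += rate * error       # actuation: move part of the way toward the target
        history.append(response)
    return response, history


# Hypothetical target value standing in for the "sample training pattern".
final_response, history = adapt(target=1.0)
```

Each cycle halves the remaining error with these settings, so the response moves steadily toward the target and the loop stops once the error falls below the tolerance.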

Artificial neural networks, which represent the electrical analogue of biological nervous systems, are gaining importance owing to their increasing applications in supervised (parametric) learning problems.

Applications of AI

Speech and Natural Language Understanding:

· Understanding speech and understanding natural language are two classical problems. In speech analysis, the main problem is to separate the syllables of a spoken word and to determine features such as the amplitude and the fundamental and harmonic frequencies of each syllable.

· The words then could be identified from the extracted features by pattern classification techniques. Recently, artificial neural networks have been employed to classify words from their features.
